Ant Group's technology team has recently open-sourced Ring-lite, a lightweight reasoning model that achieves strong results across a range of reasoning benchmarks. It sets a new state of the art among lightweight reasoning models and further demonstrates the potential of the Mixture of Experts (MoE) architecture for reasoning tasks.

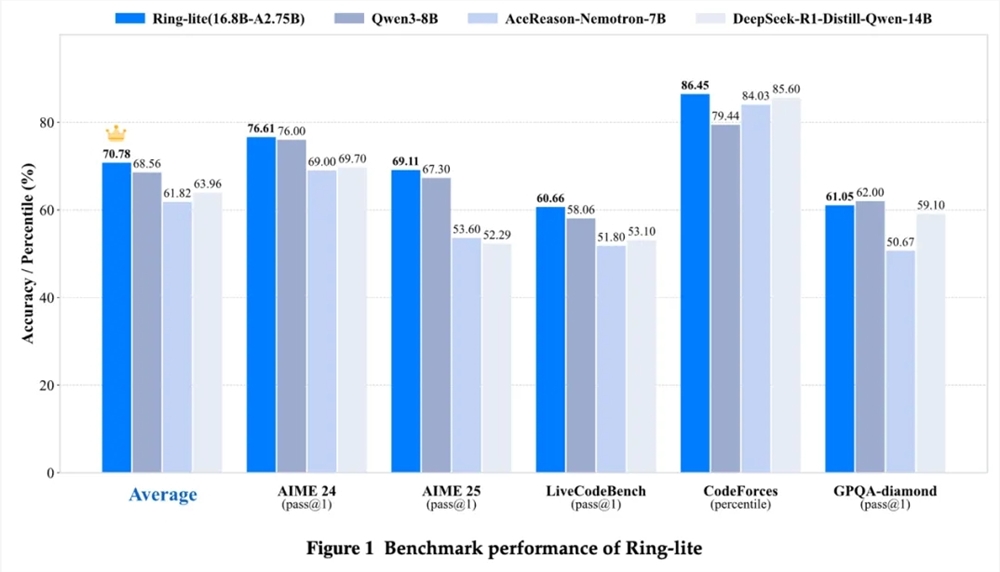

Built on Ling-lite-1.5, Ring-lite uses an MoE architecture with 16.8 billion total parameters, of which only 2.75 billion are active. Trained with the team's new C3PO reinforcement learning method, Ring-lite performs strongly on several reasoning benchmarks, including AIME24/25, LiveCodeBench, Codeforces, and GPQA-diamond. It rivals dense models under 10 billion parameters that have roughly three times as many active parameters.
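The gap between total and active parameters comes from MoE routing: a gate scores every expert for each token, but only the top-scoring few are actually run. The sketch below is a generic top-k gated MoE layer in plain Python, not Ring-lite's implementation; the expert count (8), `k=2`, the scaling "experts", and the fixed gate logits are all made-up illustrative assumptions. The last line simply computes Ring-lite's active-to-total ratio from the figures above.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]

def top_k_route(logits: Vector, k: int) -> Tuple[List[int], Vector]:
    """Pick the k experts with the highest gate logits and return their
    indices plus mixing weights (a softmax over the selected logits only)."""
    idx = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in idx)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in idx]
    total = sum(exps)
    return idx, [e / total for e in exps]

def moe_forward(x: Vector,
                experts: List[Callable[[Vector], Vector]],
                gate: Callable[[Vector], Vector],
                k: int) -> Tuple[Vector, List[int]]:
    """Toy MoE layer: only the k routed experts are evaluated for input x,
    so only their parameters are 'active' for this token."""
    idx, weights = top_k_route(gate(x), k)
    out = [0.0] * len(x)
    for i, w in zip(idx, weights):
        y = experts[i](x)  # the other experts are never called
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, idx

# Hypothetical toy setup: 8 experts that just scale their input, top-2 routing.
experts = [lambda v, s=s: [s * a for a in v] for s in range(1, 9)]

def gate(v: Vector) -> Vector:
    # Fixed illustrative logits; a real gate is a learned projection of v.
    return [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

out, used = moe_forward([1.0, 2.0], experts, gate, k=2)
print(used)                   # [4, 1]
print(round(2.75 / 16.8, 3))  # 0.164 (about 16% of parameters active)
```

Only 2 of the 8 toy experts do any work for this input, which is the same mechanism that lets a 16.8B-parameter MoE model run with a much smaller active parameter count.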

On the technical side, the Ring-lite team introduced several innovations. C3PO, their new reinforcement learning training approach, addresses the optimization problems caused by fluctuating response lengths during RL training, substantially reducing training instability and throughput fluctuations. The team also investigated the optimal training ratio between Long-CoT SFT and RL, proposing a token-efficiency-based approach guided by entropy loss to balance training performance and sample efficiency, which further improves the model's capabilities.
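To see why fluctuating response lengths destabilize RL training, compare batching by a fixed number of samples with batching by a fixed token budget. The sketch below is a generic greedy token-budget packer written for illustration only. It is an assumption, not C3PO's published algorithm, and the function names, the 4096-token budget, and the response lengths are all hypothetical.

```python
from typing import List

def pack_by_token_budget(lengths: List[int], budget: int) -> List[List[int]]:
    """Greedily group response indices, in arrival order, into steps whose
    total token count stays within `budget`. A single response longer than
    the budget gets a step of its own (a real system might truncate it)."""
    steps: List[List[int]] = []
    current: List[int] = []
    used = 0
    for i, n in enumerate(lengths):
        if current and used + n > budget:
            steps.append(current)  # close the step before it overflows
            current, used = [], 0
        current.append(i)
        used += n
    if current:
        steps.append(current)
    return steps

def tokens_per_step(lengths: List[int], steps: List[List[int]]) -> List[int]:
    return [sum(lengths[i] for i in s) for s in steps]

lengths = [1200, 300, 4000, 800, 2500, 600]  # hypothetical response lengths
budget_steps = pack_by_token_budget(lengths, budget=4096)
fixed_steps = [[0, 1], [2, 3], [4, 5]]       # fixed 2 samples per step
print(tokens_per_step(lengths, budget_steps))  # [1500, 4000, 3900]
print(tokens_per_step(lengths, fixed_steps))   # [1500, 4800, 3100]
```

With a fixed sample count, per-step token load (and so compute and gradient scale) swings with response length; capping tokens per step keeps it bounded, which is the kind of stability problem the paragraph above describes.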

Ring-lite also tackles joint training on multi-domain data, systematically comparing the strengths and weaknesses of mixed training and staged training, and achieves synergistic gains across three domains: mathematics, code, and science. Across a range of complex reasoning tasks, Ring-lite performs consistently well, particularly in mathematical reasoning and programming competitions, where it outperforms the models it was compared against.

To assess Ring-lite's effectiveness in practice, the team tested it on high school math and physics problems. On a national college entrance exam (gaokao) math paper, Ring-lite scored around 130 points, a strong result for a model of its size.

Ant Group's technology team stated that the open-source release of Ring-lite includes not only the model weights and training code; the team will also gradually release all training datasets, hyperparameter configurations, and even experiment logs. This may be the first lightweight MoE reasoning model to be fully transparent across the entire training process, giving researchers in related fields a valuable reference.

GitHub: https://github.com/inclusionAI/Ring

Hugging Face: https://huggingface.co/inclusionAI/Ring-lite

ModelScope: https://modelscope.cn/models/inclusionAI/Ring-lite