Anthropic, a startup founded by former OpenAI researchers, launched two new AI models, Claude Opus 4 and Claude Sonnet 4, at its inaugural developer conference. Anthropic says the models, which make up the Claude 4 family, are among the industry's best performers on popular benchmarks. They can analyze large datasets, execute long-horizon tasks, and take complex actions. Both have been tuned for programming tasks, which makes them well suited to writing and editing code.

Sonnet 4 will be accessible to both paying users and users of Anthropic's free chatbot apps, while Opus 4 will be available exclusively to paying users. Both models are offered through Anthropic's API as well as Amazon's Bedrock platform and Google's Vertex AI. Opus 4 is priced at $15 per million input tokens and $75 per million output tokens; Sonnet 4 costs $3 per million input tokens and $15 per million output tokens. Tokens are the raw units of data that AI models work with, and a million tokens is roughly equivalent to 750,000 words.
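To make the pricing concrete, here is a minimal sketch of the arithmetic using the per-million-token rates and the 750,000-words-per-million-tokens rule of thumb quoted above. The dictionary keys `opus-4` and `sonnet-4` and both function names are illustrative labels chosen for this example, not official API model identifiers.

```python
# Per-million-token prices in USD as (input, output), taken from the article.
# The keys are hypothetical labels, not official model IDs.
PRICES_PER_MILLION = {
    "opus-4": (15.00, 75.00),
    "sonnet-4": (3.00, 15.00),
}

# Rule of thumb from the article: 1,000,000 tokens is about 750,000 words.
WORDS_PER_TOKEN = 0.75


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for one request."""
    input_price, output_price = PRICES_PER_MILLION[model]
    total = input_tokens * input_price + output_tokens * output_price
    return total / 1_000_000


def words_to_tokens(words: int) -> int:
    """Roughly convert a word count to a token count."""
    return round(words / WORDS_PER_TOKEN)
```

Under these rates, a request with 200,000 input tokens and 50,000 output tokens would cost about $1.35 on Sonnet 4 and about $6.75 on Opus 4. Actual token counts depend on the tokenizer, so the word conversion is only an approximation.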

The launch comes as Anthropic looks to grow its revenue substantially. The company has set a target of $12 billion in earnings by 2027, up from a projected $2.2 billion this year. Anthropic recently closed a $2.5 billion credit facility and raised billions of dollars from Amazon and other investors to cover the rising costs of developing cutting-edge models.

Opus 4, the more capable of the two models, can maintain focused effort across multiple steps in a workflow, while Sonnet 4, designed as a drop-in replacement for Sonnet 3.7, shows improvements in coding, math, and instruction following. The Claude 4 family is also less prone to "reward hacking," a behavior where models take shortcuts to complete tasks.

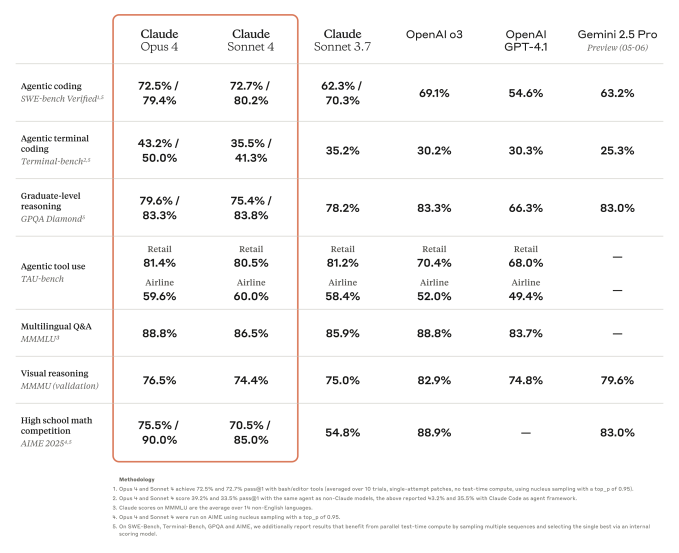

While these improvements have not made the Claude 4 models the world's best by every benchmark, Opus 4 does outperform Google's Gemini 2.5 Pro and OpenAI's o3 and GPT-4.1 on SWE-bench Verified, a benchmark designed to evaluate a model's coding abilities. However, it cannot surpass o3 on the multimodal evaluation MMMU or GPQA Diamond, a set of PhD-level questions related to biology, physics, and chemistry.

Anthropic is releasing Opus 4 with stricter safeguards, including enhanced harmful content detectors and cybersecurity defenses. The company's internal testing suggests that Opus 4 may substantially increase the ability of someone with a STEM background to obtain, produce, or deploy chemical, biological, or nuclear weapons, reaching Anthropic's "ASL-3" model specification.

Both Opus 4 and Sonnet 4 are hybrid models, capable of near-instant responses and extended thinking for deeper reasoning. With reasoning mode switched on, the models can take more time to consider possible solutions before answering. As they reason, they show a user-friendly summary of their thought process rather than the full reasoning, in part to protect Anthropic's competitive advantages.

Opus 4 and Sonnet 4 can use multiple tools, such as search engines, in parallel and alternate between reasoning and tools to improve the quality of their answers. They can also extract and save facts in "memory" to handle tasks more reliably, building what Anthropic describes as "tacit knowledge" over time.

To make the models more programmer-friendly, Anthropic is rolling out upgrades to Claude Code, its tool that lets developers run specific tasks through Anthropic's models directly from a terminal. Claude Code now integrates with IDEs and offers an SDK that lets developers connect it with third-party applications. The Claude Code SDK, announced earlier this week, enables running Claude Code as a subprocess on supported operating systems, providing a way to build AI-powered coding assistants and tools that leverage Claude models' capabilities.
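As a rough illustration of the subprocess approach, the sketch below shells out to a command-line tool with a prompt and returns its output. It assumes the `claude` command is installed and on the PATH and that it is invoked in its non-interactive print mode (`-p`); the article does not specify the invocation, and the helper name `run_cli_prompt` and its parameters are hypothetical.

```python
import subprocess
from typing import Sequence


def run_cli_prompt(
    prompt: str,
    command: Sequence[str] = ("claude", "-p"),  # assumed invocation, see lead-in
    timeout: float = 300.0,
) -> str:
    """Run a CLI tool as a subprocess, passing the prompt as the final argument.

    Raises subprocess.CalledProcessError if the tool exits with a non-zero
    status, and subprocess.TimeoutExpired if it runs longer than `timeout`.
    """
    result = subprocess.run(
        [*command, prompt],
        capture_output=True,  # collect stdout and stderr instead of printing them
        text=True,            # decode output as str rather than bytes
        timeout=timeout,
        check=True,
    )
    return result.stdout.strip()
```

A caller might use `run_cli_prompt("Summarize the changes in this repository")` from inside a project directory. Keeping the command configurable makes it easy to swap in a different binary path or to test the wrapper without the real tool installed.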

Anthropic has released Claude Code extensions and connectors for Microsoft's VS Code, JetBrains, and GitHub. The GitHub connector allows developers to tag Claude Code to respond to reviewer feedback and attempt to fix errors in, or otherwise modify, code.

AI models still struggle to produce quality software, in part because of weaknesses in understanding programming logic. Even so, their potential to boost coding productivity is driving rapid adoption among companies and developers. Anthropic, aware of this, is promising more frequent model updates to deliver breakthrough capabilities to customers faster and keep them at the cutting edge.