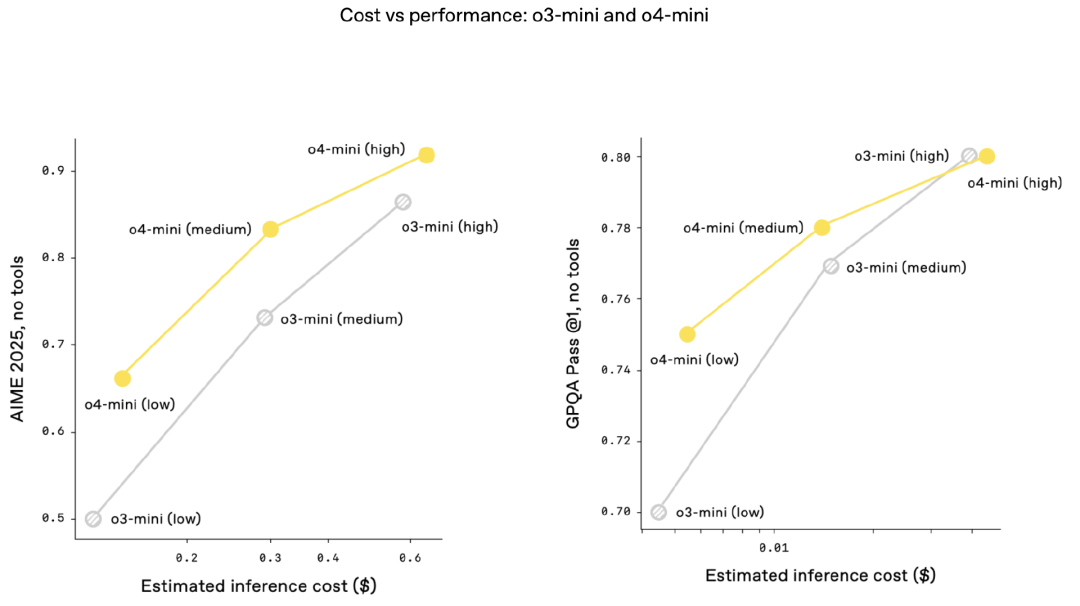

In early April 2025, while China was celebrating the Qingming Festival, Meta on the other side of the Pacific released the Llama 4 model. This model, which draws on DeepSeek's technology and uses an FP8 precision-trained MoE architecture, is a natively trained multimodal model. The release includes two sizes of models, but their actual test results were not as satisfactory as expected, causing a stir in the AI community. Subsequently, Google introduced the A2A (Agent2Agent) protocol, aimed at solving the problem of how multiple agents will communicate and collaborate in the future. This led to renewed discussions on whether MCP and A2A are substitutable or competitive. On April 16, OpenAI continued its efforts by officially releasing two new AI reasoning models, o3 and o4-mini, which enable AI to "think through images."

Looking back over the past few months since the Spring Festival of 2025, we can keenly feel the rapid changes in the field of AI. The once "three to five months of leading the trend" has now been shortened to "only two to three weeks," and this accelerating trend continues.

Models:

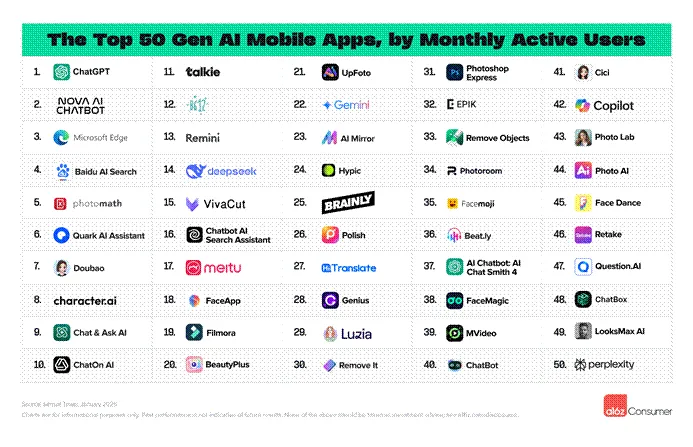

LLMs and Multimodal Fields: Alibaba's Qwen series, Google's Gemini series, OpenAI's GPT series, and DeepSeek's cutting-edge papers (such as NSA, GRM) and the MoBA model that "collided" with KIMI have all taken turns to show their strengths.

AI4S, Embodied Intelligence, and Other Directions: Unlike the large language model field, these areas have not yet converged into a unified architecture for continuous deepening and optimization. They are still exploring in multiple directions such as temporal models, graph neural networks (GNNs), graph attention networks (GATs), diffusion models, and spatiotemporal transformation networks (STTNs).

Sora-like Text-to-Video Models: Including Kuaishou's Ke Ling, ByteDance's Ji Meng, and Alibaba's Wan Xiang, these models are all based on the diffusion model paradigm. Their development pace is between that of LLMs and AI4S. Domestic media colleges and top-tier advertising companies like Ogilvy have also started to explore applications. There are already a large number of AI-generated short videos on platforms like Douyin and Kuaishou, with rapidly growing viewing traffic. It seems like a "garden full of flowers," but in reality, it is a garden of one kind of flower.

Agents:

After OpenAI released DeepResearch and Google and xAI released their respective DeepSearch, the real Agent craze was ignited by Monica's release of Manus on the early morning of March 6. Subsequently, open-source replicas such as OpenManus and OWL emerged, further driving the popularization of MCP (Model Context Protocol) and agent communication protocols (such as ANP and the IEEE SA-P3394 standard).

AI Native Applications:

Tencent quietly released the IMA app (available for download on mobile app stores), which quickly gained a good reputation among young knowledge workers.

This article, based on the above rapidly changing background, focuses on the key engine behind the leap in AI technology—the large model training process. Whether it is the much-anticipated release of Llama 4, the protocol competition in the agent field, or the rapid iteration of large model architectures, all rely on powerful computational infrastructure. In fact, the current AI competition has already entered the era driven by computational power. The scale, efficiency, and stability of computational power directly determine the iteration speed and effectiveness of large models.

Next, we will introduce the general process of large model training in detail, discuss the specific challenges, technical details, and key trends for future development.

The General Process of Large Model Training

All large models and intelligence rely on computational power. However, we are still far from the ideal large model training system. In reality, the training algorithm team, model team, and AIInfra team need to deeply integrate and gradually achieve breakthroughs from hundreds of cards to thousands, tens of thousands, and even hundreds of thousands of cards. Large model training is a complex and resource-intensive process involving multiple stages:

Model Research and Initial Launch Stage

In this stage, the model research team completes the model design through single-point research and deploys the large model to the cluster for initial training. Issues such as data throughput and data alignment may arise in the early stage, but these are usually discovered and resolved at the beginning of the model launch. For example, data alignment issues can lead to inconsistent gradient calculations on different nodes, thereby affecting training effectiveness. However, these issues are quickly identified because they occur at the beginning of the model launch, and training continues.

Driving While Fixing the Car—Dealing with Catastrophic Issues

During the development process, the large model team may encounter "catastrophic issues," such as hidden small bugs that cause the cluster to frequently report errors. These issues need to be resolved while training continues, essentially "fixing while driving." For example, a hidden small bug may cause the cluster to frequently crash at 40% of the training process. This requires the team to quickly locate and fix the problem while maintaining the continuity of training.

Model Capability Acceleration Stage

After the catastrophic "driving while fixing" process, the large model team accumulates rich full-stack technology, making the replication of the next version of the large model more efficient. For example, DeepSeek's process from V1 to V2 to V3 to R1 sees accelerating model capability improvements. OpenAI's model capability increased by about 10 times from GPT-4 to GPT-4.5, achieving "difficult-to-quantify but all-around enhanced intelligence." In this stage, the team usually optimizes the model architecture and training algorithms to improve training efficiency and model performance.

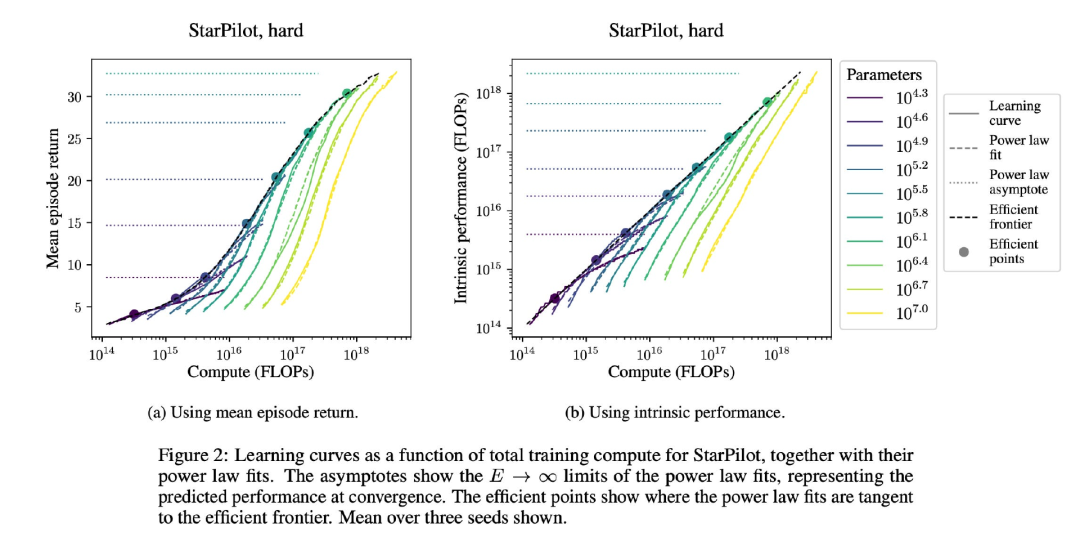

Focusing on Performance and Efficiency Improvement

After the model capability acceleration period, it is found that the Scaling Law still plays an important role. To achieve the next 10 times or even 100 times performance improvement, the key lies in data efficiency, that is, the ability to use more computational power to learn more knowledge from the same amount of data.

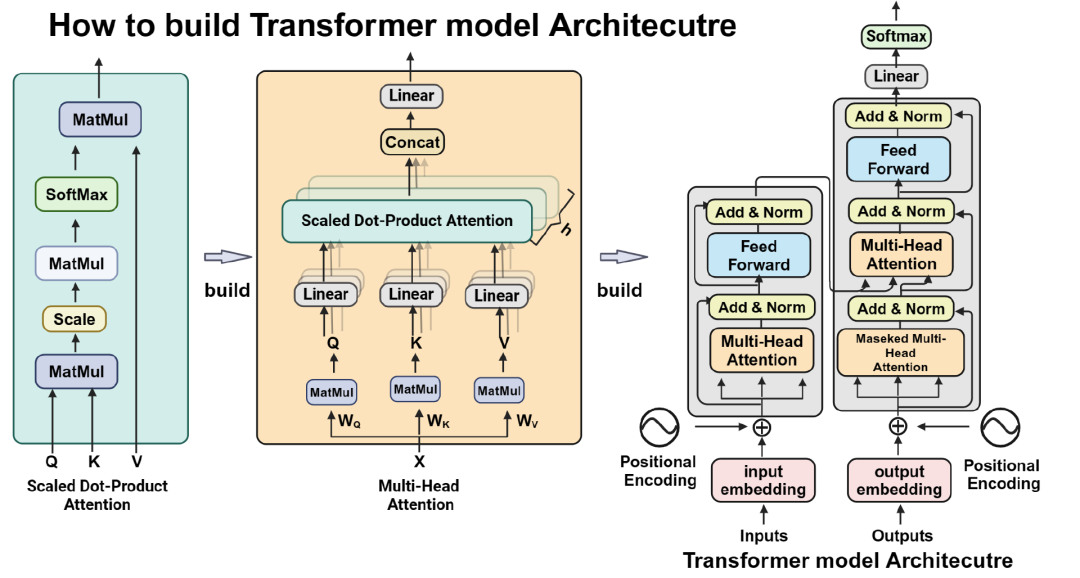

Challenges and Opportunities of the Transformer Architecture

The Transformer architecture is widely used because it is very efficient in utilizing data, absorbing and compressing information, and achieving generalization. Its biggest feature is the ability to efficiently absorb information using computational resources. However, its potential bottlenecks are also gradually emerging:

The depth at which the Transformer extracts useful information from data is limited. When computational power grows rapidly while data growth is relatively slow, data becomes a bottleneck for this standard model. This requires algorithmic innovation to develop methods that can use more computational power to learn more knowledge from the same amount of data.

A major advantage of the Transformer architecture is its performance in data efficiency. It can effectively capture long-range dependencies through the Self-Attention mechanism, thus performing well when handling large-scale datasets. However, as the model size increases, improving data efficiency becomes increasingly difficult. For example, a model with 100 billion parameters may require millions of training samples to achieve optimal performance, while the growth rate of data often cannot keep up with the growth of model size.

Monitoring the loss curve during training is key to ensuring the normal operation of the model. By real-time monitoring of the Loss curve, abnormal trends in the training process can be detected in time, and corresponding optimization measures can be taken. For example, if the loss curve fluctuates during training, it may be due to uneven distribution of weight data among multiple cards, causing overflow during computation aggregation. This issue is actually quite difficult to identify at the infra level, as it appears algorithmically sound.

In addition to the above, we also need to continuously optimize the entire large model training system to make up for the co-design that the algorithm team and Infra team failed to complete before training started. For example, closely monitoring various statistical indicators during the training process to ensure that no unexpected abnormal situations occur.

Moreover, in addition to the growth of data and computational power, algorithmic improvements in the Transformer architecture also have a cumulative effect on performance. Each 10% or 20% improvement in algorithm performance, when Overlaying on data efficiency will bring significant improvement effects. At present, OpenAI and DeepSeek are entering a new stage of AI research and will begin to accumulate achievements in data efficiency.

The New Era of Large Model Training and the Evolution of Hardware Needs

In fact, many of the problems encountered in large-scale parallel clusters, such as ten-thousand-card and hundred-thousand-card AI clusters, are not new but have existed from the beginning. They just become catastrophic as the scale expands.

Pretraining and Reinforcement Learning Data Conflict: Pretraining datasets typically pursue breadth and diversity. However, when it comes to model reinforcement learning, i.e., LLM+RL, it is difficult to maintain the breadth of the dataset while providing a clear reward signal and a good training environment for the model. Pretraining is essentially a data compression process aimed at discovering connections between different things. It focuses more on analogical and abstract learning. Inference, on the other hand, is a specific capability that requires careful thinking and can help solve a variety of problems. Through cross-domain data compression, pretraining models can grasp higher-level abstract knowledge.

Scaling Law Has Not Reached Theoretical Limits: From the perspective of machine learning and algorithm development, we have not yet reached the theoretical upper limits of the Scaling Law and the Transformer architecture. Different generations of model architectures (or models of different parameter scales) are essentially the result of model specification evolution. For example, we cannot simply use the architecture and data volume of a 30B model to train a 160B model. When computational demands exceed the capacity of a single cluster, it is necessary to turn to multi-cluster training architectures, which is why there is now a lot of AIInfra research on heterogeneous scenarios.

Building a cluster system of ten thousand or even a hundred thousand cards is not the ultimate goal. The real core lies in its actual output value—whether it can train an excellent large model. OpenAI has already crossed the four stages of large model training and entered a new era of computational power. For teams like OpenAI and DeepSeek, computational resources are no longer the main bottleneck. This shift has profound implications for the industry and the companies themselves. After all, since 2022, with the advent of the era of a hundred models, most algorithm and model vendors have been in a long-term environment of limited computational resources.

So, at the overall level of a ten-thousand-card cluster, what limits large-scale model training? Is it the chip, processor, memory, network, or power supply? Since many domestic teams are in the transition phase, what are their needs for chips, processors, etc.?

The system-level bottlenecks in large model training are not caused by a single factor but are comprehensive challenges involving computation, storage, communication, energy, and more. In other words, for large models, AIInfra plays a crucial role.

Computation and Storage: Balancing Chips, Memory, and Bandwidth

The performance of computing chips (such as GPUs/TPUs) directly affects training efficiency, including computational density (TFLOPS), memory capacity (such as HBM bandwidth), and high-speed interconnect capabilities (NVLink/RDMA). For example, training a model with hundreds of billions of parameters requires TB-level memory to store parameters and intermediate states. Insufficient memory bandwidth can lead to idle computing units, creating a "memory wall." Moreover, as model size increases, the computing capacity of a single cluster may not meet the demand, forcing teams to turn to multi-cluster architectures. At this point, state synchronization and communication overhead become new bottlenecks.

Optimizing the memory system is equally crucial. In addition to memory, the hierarchical collaboration of host memory (DRAM) and storage (SSD/HDD) also affects data throughput. For example, efficient memory management is needed for saving and loading checkpoints during training, and storage I/O delays can slow down the entire process. Therefore, modern training systems require bandwidth matching between memory, memory, and storage to avoid any one link becoming a weak point.

Communication and Networking: The Efficiency of Cross-Node Collaboration

In large-scale distributed training, network communication is often one of the main bottlenecks. Collective operations such as AllReduce require efficient cross-node data transfer, and low-bandwidth or high-latency networks (such as traditional Ethernet) can significantly increase synchronization time. Currently, 800Gbps RDMA networks are becoming the standard for supercomputing clusters, but topology design (such as Dragonfly, Fat-Tree) and communication scheduling algorithms (such as topology-aware AllReduce) still need optimization to avoid network congestion.

Moreover, multi-cluster training introduces more complex communication issues. For example, cross-data center training may be limited by the bandwidth of the wide area network (WAN), and the choice of consistency protocols (such as the synchronization strategy of the parameter server) affects training stability and speed. Therefore, when domestic teams build ten-thousand-card clusters, they need not only high-speed interconnect hardware but also software-level communication optimization, such as gradient compression and asynchronous training.

Energy and Cooling: The Sustainability of High-Density Computing

As computational density increases, power supply and cooling become significant limiting factors. The power of a single cabinet has increased from the traditional 10kW to over 30kW, and the efficiency of air cooling is approaching its limit. Liquid cooling technologies (such as cold plates and immersion) are gradually becoming popular. This involves not only hardware modifications (such as power supply redundancy and cooling pipe design) but also software-level power management, such as dynamic voltage frequency scaling (DVFS) and task scheduling optimization, to reduce overall energy consumption.

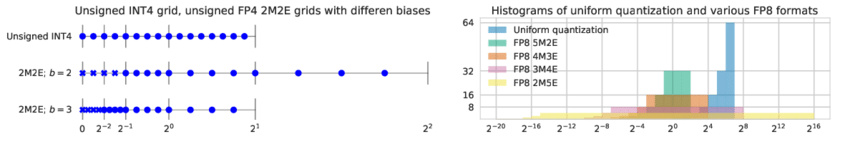

Under limited computational power, techniques such as low-precision training (FP8/BF16) and dynamic sparsity can improve hardware utilization. The stability of ten-thousand-card clusters requires hardware-level fault tolerance (such as automatic recovery) and global memory consistency (CXL technology). Currently, the industry is exploring new technologies such as 3D packaging, compute-in-memory, and optical interconnects to break through traditional architectural limitations.

The bottleneck of large model training is essentially a system-level challenge that requires full-stack optimization from chips, networks, energy to software stacks. The current computational infrastructure plays a key role in large model training, but we are still far from the ideal large model training system.