Microsoft has recently introduced LazyGraphRAG, a new approach to Retrieval-Augmented Generation (RAG) that promises the efficiency and cost-effectiveness needed for wide-scale adoption of graph-based RAG. The system aims to overcome the limitations of existing tools while combining their strengths, significantly reducing costs while maintaining high performance.

LazyGraphRAG cuts data indexing costs to just 0.1% of full GraphRAG's. According to Microsoft researchers, the scheme is inherently scalable in both cost and quality, delivering strong performance across the cost-quality spectrum. It also lowers the cost of global searches over a dataset and makes local searches more efficient.

GraphRAG, a name combining "Graph" and RAG (Retrieval-Augmented Generation), uses text extraction, network analysis, and LLM prompting and summarization within a single end-to-end system to build a deep understanding of text-based datasets. Since its initial open-source release in July 2024, GraphRAG has garnered significant attention, amassing 19.7k stars on GitHub and becoming one of the most popular RAG frameworks currently available.

In the realm of artificial intelligence, RAG systems are crucial for document summarization, knowledge extraction, and exploratory data analysis tasks. However, one of the primary issues with current systems is the trade-off between cost and quality. Traditional methods, such as vector-based RAG, perform well in localized tasks, like retrieving direct answers from specific text fragments. Yet, they struggle with global queries that require a comprehensive understanding of the dataset. Graph-supported RAG systems, on the other hand, can better address these broader issues by utilizing relationships within data structures. However, the high indexing costs associated with graph RAG systems have made them less palatable for cost-sensitive scenarios. Balancing scalability, affordability, and quality remains a critical bottleneck in existing technologies.

LazyGraphRAG emerges as a new system that not only surmounts the limitations of current tools but also integrates their advantages. By eliminating the need for high-cost initial data summarization, LazyGraphRAG reduces indexing costs to a level close to that of vector RAG.

Microsoft is set to release an open-source version of LazyGraphRAG soon, which will be incorporated into the GraphRAG library. The open-source link can be found at: https://github.com/microsoft/graphrag.

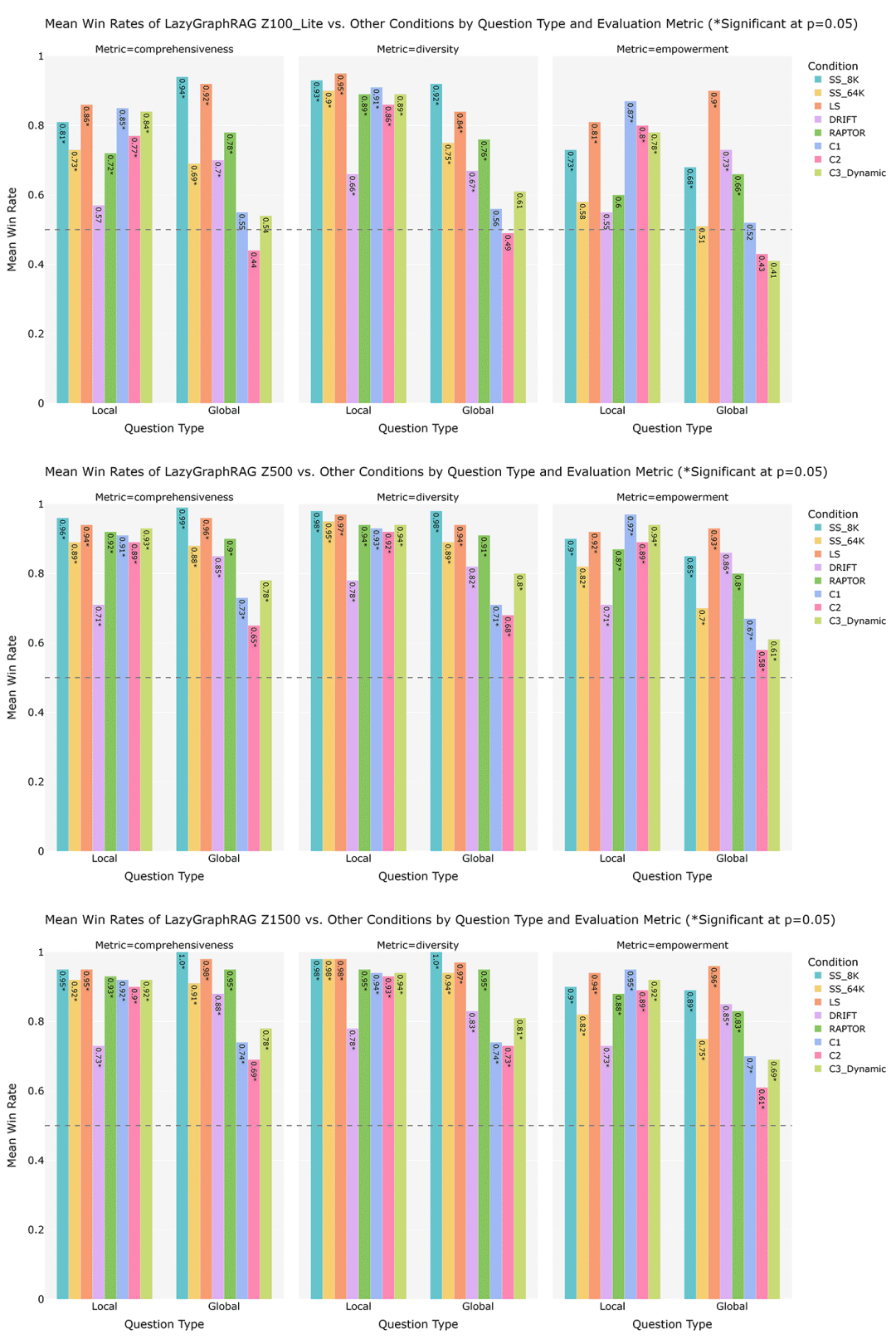

LazyGraphRAG is a notable advance in retrieval-augmented generation, with Microsoft dubbing it a "low-cost solution suitable for all scenarios." To assess its performance, Microsoft evaluated LazyGraphRAG at three budget levels.

– In the lowest budget scenario (100 relevance tests, using a low-cost LLM, at a cost equivalent to SS_8K, the semantic search (vector RAG) baseline), LazyGraphRAG significantly outperformed all conditions on local and global queries, with one exception: it lagged slightly behind the GraphRAG global search conditions on global queries.

– In the medium budget scenario (500 relevance tests, using a more advanced LLM, at 4% of the query cost of C2, a GraphRAG global search condition), LazyGraphRAG outperformed all comparison conditions on both local and global queries.

– In the high budget scenario (1,500 relevance tests), LazyGraphRAG's win rate further increased, demonstrating its excellent scalability in balancing cost and quality.

LazyGraphRAG combines vector RAG and GraphRAG, "overcoming the individual limitations of both." Microsoft states, "LazyGraphRAG shows that a single, flexible query mechanism has the potential to greatly surpass various specialized query mechanisms across the local-global query range, while eliminating the upfront cost of LLM-based data summarization."

The system's extremely fast and nearly cost-free indexing makes LazyGraphRAG well suited to one-off queries, exploratory analysis, and streaming data use cases. Moreover, its answer quality improves steadily as the relevance test budget increases, which makes it a useful tool for benchmarking other RAG methods.

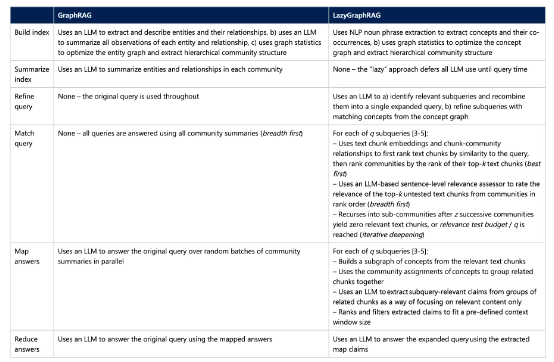

Vector RAG, also known as semantic search, is a "best-first search form that uses similarity to the query to select the best-matching source text blocks." However, semantic search has a significant drawback: because it looks only at the closest matches, it cannot take in the breadth of the dataset that global queries require.
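To make the "best-first" idea concrete, here is a minimal sketch of similarity-based chunk selection. It is not GraphRAG or LazyGraphRAG code: the function names, the two-dimensional toy vectors, and the chunk ids are all hypothetical, and a real system would use embeddings from a model instead of hand-written vectors.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def best_first_chunks(query_vec, chunk_vecs, k=2):
    """Return the ids of the k chunks most similar to the query (best first)."""
    ranked = sorted(
        chunk_vecs.items(),
        key=lambda item: cosine(query_vec, item[1]),
        reverse=True,
    )
    return [chunk_id for chunk_id, _ in ranked[:k]]


# Hypothetical toy embeddings: c1 and c2 point roughly the same way as the query.
chunks = {"c1": [1.0, 0.0], "c2": [0.9, 0.1], "c3": [0.0, 1.0]}
top = best_first_chunks([1.0, 0.0], chunks, k=2)
print(top)
```

Only the top-ranked chunks are ever looked at, which is why this style of search answers narrow questions well but never sees the rest of the dataset.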

Researchers note that GraphRAG global search is a breadth-first search that uses the community structure of the entities in the source text to ensure that the full breadth of the dataset is reflected in query results. Its weakness is that it has no way to identify which communities are best suited to a local query.

GraphRAG is more adept at providing answers that emphasize breadth, suitable for questions like "What is the core theme?" or "What characteristics of X are reflected in this information?" In contrast, vector RAG is better suited to local queries where the answer closely resembles the question, such as "who, what, when, where" questions. This is why it is described as a "best-first" search.

LazyGraphRAG differs from GraphRAG in its iterative deepening approach, which dynamically combines best-first and breadth-first search: it first searches to a limited depth, then iteratively goes deeper into the dataset.
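The following is a loose sketch of how best-first ranking, breadth-first coverage, and level-by-level deepening could fit together. It is an illustration under stated assumptions, not Microsoft's implementation: the `Community` class, the `lazy_search` function, the precomputed similarity scores, and the `is_relevant` callback (standing in for a relevance test) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Community:
    name: str
    chunks: dict  # chunk id -> precomputed similarity to the query (assumed given)
    children: list = field(default_factory=list)


def lazy_search(roots, is_relevant, budget, k=1):
    """Search one level at a time, spending at most `budget` relevance tests."""
    relevant, tested = [], set()
    frontier = list(roots)
    while frontier and len(tested) < budget:
        # Best-first: visit communities in order of their most similar chunk.
        frontier.sort(key=lambda c: max(c.chunks.values(), default=0.0), reverse=True)
        next_frontier = []
        # Breadth-first: every community at this depth is considered before going deeper.
        for community in frontier:
            untested = [cid for cid in community.chunks if cid not in tested]
            top = sorted(untested, key=community.chunks.get, reverse=True)[:k]
            found = False
            for chunk_id in top:
                if len(tested) >= budget:
                    break
                tested.add(chunk_id)
                if is_relevant(chunk_id):
                    relevant.append(chunk_id)
                    found = True
            # Deepen only into communities that produced a relevant chunk.
            if found:
                next_frontier.extend(community.children)
        frontier = next_frontier
    return relevant, len(tested)


# Hypothetical two-level hierarchy.
a = Community("A", {"a1": 0.9, "a2": 0.4},
              [Community("A1", {"a3": 0.8}), Community("A2", {"a4": 0.2})])
b = Community("B", {"b1": 0.3}, [Community("B1", {"b2": 0.1})])

hits, used = lazy_search([a, b], lambda cid: cid in {"a1", "a3"}, budget=10)
print(hits, used)
```

In this toy run the search never descends below community B, because B's best chunk fails its relevance test. Lowering `budget` stops the search earlier, so fewer relevant chunks are found.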

Microsoft states that LazyGraphRAG's data indexing costs are on par with vector RAG and only 0.1% of the full GraphRAG costs. "Under the same configuration, LazyGraphRAG also demonstrates answer quality comparable to GraphRAG global search, but the cost of global queries is reduced to less than 1/700. With only 4% of the query cost of GraphRAG global search, LazyGraphRAG can significantly outperform all competing methods on both local and global queries."
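To see what these ratios mean in practice, the arithmetic can be worked through on made-up numbers. The dollar amounts below are purely hypothetical and appear nowhere in Microsoft's results; only the three ratios (0.1%, 1/700, and 4%) come from the text above.

```python
def lazy_costs(graphrag_index_cost, graphrag_global_query_cost):
    """Apply the ratios quoted above to hypothetical GraphRAG baseline costs."""
    return {
        # Indexing at 0.1% of full GraphRAG indexing cost.
        "index": graphrag_index_cost * 0.001,
        # Comparable answer quality: 1/700 is an upper bound ("less than 1/700").
        "query_comparable_quality": graphrag_global_query_cost / 700,
        # Higher-budget setting: 4% of the GraphRAG global search query cost.
        "query_outperforming": graphrag_global_query_cost * 0.04,
    }


# Hypothetical baseline: $1,000 to index, $7 per global query.
costs = lazy_costs(graphrag_index_cost=1000.0, graphrag_global_query_cost=7.0)
for name, value in costs.items():
    print(f"{name}: ${value:.2f}")
```

Under these assumed baselines, indexing would drop from $1,000 to about $1, and a global query from $7 to about one cent at comparable quality, or to about 28 cents in the higher-budget setting.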

Microsoft explains, "Compared to the full GraphRAG global search mechanism, this method is indeed 'lazy' to some extent, as it defers the use of LLMs, thereby greatly improving answer generation efficiency. Its overall performance can be scaled through a single main parameter (the relevance test budget), which consistently manages the trade-off between cost and quality."

In other words, the method is "lazy" in that it applies large language models (LLMs) only when necessary. It does not preprocess the entire dataset beforehand; instead, it runs initial relevance tests on smaller subsets of the data to identify potentially relevant information.
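The deferral can be pictured as a two-stage pipeline: a budgeted pass of relevance tests, followed by expensive model calls on the survivors only. The sketch below is a simplification with hypothetical names (`answer_lazily`, `fake_llm`). It uses a keyword check as a stand-in for the relevance test and an upper-casing function as a stand-in for the expensive LLM, so the number of expensive calls can be counted.

```python
def answer_lazily(chunks, relevance_test, expensive_llm, budget):
    """Run at most `budget` relevance tests, then call the expensive model on hits only."""
    # Stage 1: test only as many chunks as the budget allows.
    candidates = chunks[:budget]
    relevant = [chunk for chunk in candidates if relevance_test(chunk)]
    # Stage 2: the expensive model never sees untested or irrelevant chunks.
    return [expensive_llm(chunk) for chunk in relevant]


calls = []


def fake_llm(chunk):
    """Stand-in for an expensive LLM call; records each invocation."""
    calls.append(chunk)
    return chunk.upper()


chunks = ["graph index cost", "weather report", "graph query cost", "sports news"]
out = answer_lazily(chunks, lambda c: "graph" in c, fake_llm, budget=3)
print(out, len(calls))
```

With a budget of 3, three chunks are tested and the expensive stand-in runs only twice. Raising the budget widens the pool of tested chunks, which is the cost-quality dial the quote describes.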

Only after completing these tests does the system bring in resource-intensive large language models for more in-depth analysis. The approach brings to mind a quote attributed to Bill Gates from his time leading Microsoft: "I would prefer to hire a lazy person to do a hard job, because a lazy person will find an easier way to do it."