Claude MCP has suddenly surged in popularity, becoming the "universal plug" of the AI agent world, with some users reporting a tenfold boost to their Cursor workflows.

MCP (Model Context Protocol) is an open standard launched in November 2024 by Anthropic, the company behind Claude, and it has recently been attracting widespread attention. Its goal is to give large language models a standardized way to connect to external data sources, tools, and services.

Although it received little attention at launch, the situation has changed recently. Many people on X are now enthusiastically discussing MCP servers, believing they will become a disruptive innovation in AI applications.

The sudden explosion of MCP

Last week, Windsurf announced that they had connected MCP servers to their software, enabling direct interaction with platforms like GitHub, Slack, and Figma, adding more practical tools to Cascade and creating a brand-new workflow.

Many others have tried the combination of Cursor and MCP.

Some have tried integrating MCP with Google Docs through Cursor in less than 2 minutes and used this feature to create product requirement documents (PRDs).

Others have combined Cursor and MCP into an automated pipeline that lets companies respond to customer requests quickly and complete the development work automatically.

Specifically, when a customer wrote "We need a new feature!" on Slack, programmers used to check Slack by hand, write the code themselves, and then submit it. Now, when a customer makes a feature request, Cursor reads the request from Slack, builds the required feature, and opens a pull request for the development team to review and merge. The entire process is automated, which greatly improves efficiency and lets engineers focus on longer-term work.
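The flow described above can be sketched as a small pipeline. This is an illustration only: the tool names (`slack.read_requests`, `code.implement`, `github.create_pull_request`) and the `call_tool` callback are hypothetical placeholders, not identifiers from Cursor or from any real MCP server, and the stand-in at the bottom returns canned values so the sketch runs without any external service.

```python
from typing import Any, Callable, Dict, List

# Hypothetical signature: call_tool(tool_name, arguments) -> result.
ToolCaller = Callable[[str, Dict[str, Any]], Any]


def handle_feature_requests(call_tool: ToolCaller, channel: str) -> List[str]:
    """Turn each feature request in a channel into a pull request URL."""
    pr_urls = []
    requests = call_tool("slack.read_requests", {"channel": channel})
    for request in requests:
        # The agent drafts a change for the request (stubbed in this sketch).
        branch = call_tool("code.implement", {"description": request})
        # The change is submitted for human review, not merged automatically.
        pr_url = call_tool(
            "github.create_pull_request",
            {"branch": branch, "title": request},
        )
        pr_urls.append(pr_url)
    return pr_urls


def fake_call_tool(name: str, arguments: Dict[str, Any]) -> Any:
    """In-memory stand-in with canned answers; all values are made up."""
    if name == "slack.read_requests":
        return ["Add CSV export"]
    if name == "code.implement":
        return "feature/add-csv-export"
    if name == "github.create_pull_request":
        return "https://example.invalid/pr/" + arguments["branch"]
    raise ValueError(f"unknown tool: {name}")


if __name__ == "__main__":
    print(handle_feature_requests(fake_call_tool, "#customer-requests"))
```

The point of the design is that the pipeline only ever sees one calling convention, so swapping the stand-in for real tool servers would not change `handle_feature_requests` itself.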

Some bloggers even said that MCP has reshaped their Cursor workflow, increasing efficiency by 10 times.

These cases also reflect why MCP has become popular.

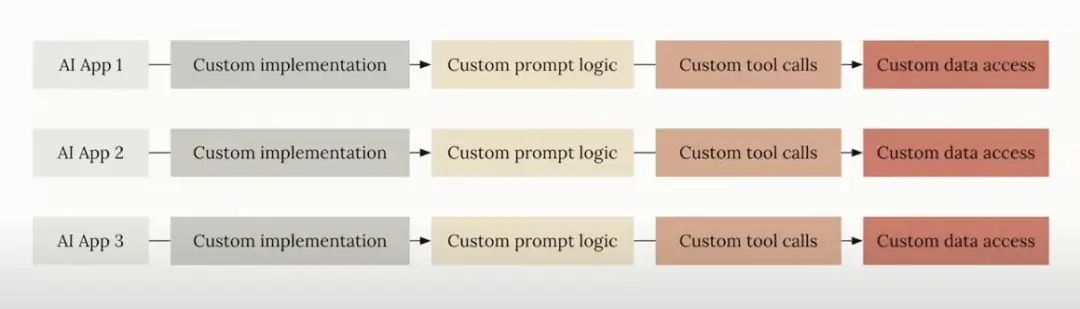

Although new AI applications have emerged in the past, we generally feel that they are mostly independent new services that lack effective integration with the systems we use daily. That is to say, the integration of AI models with existing systems has been slow. We currently cannot use a single AI application to perform online searches, send messages, update databases, etc. These functions are not difficult to achieve individually, but it is quite troublesome to integrate them all into a system.

Take daily development as an example. Imagine that, inside an IDE, we could ask the IDE's AI to do the following: query existing data in the local database to assist development; search GitHub Issues to determine whether a problem is a known bug; and pick up coding requirements from instant messaging software, implement them, and submit PRs…

Now, these capabilities are becoming a reality through MCP. One reason AI has been slow to integrate with existing services is the lack of an open, universal protocol standard on the technical side, and MCP aims to fill this gap. With MCP, developers can add the integrations they need in very little time, improving the efficiency of workflows in Cursor and other IDEs.

Is MCP the "universal plug" in the AI world?

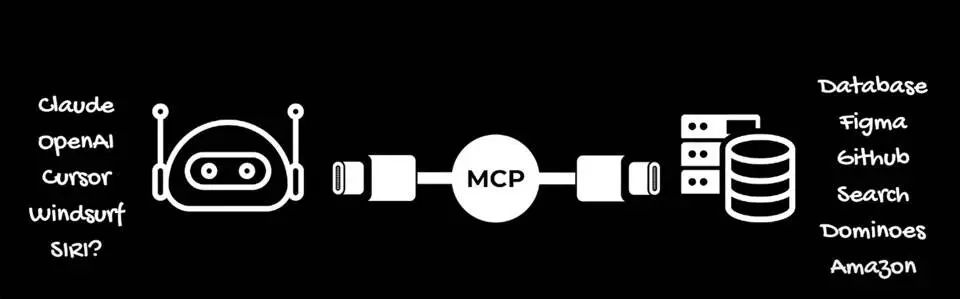

Anthropic has stated that MCP was inspired by Microsoft's LSP (Language Server Protocol), and it compares MCP to a USB-C port for AI applications, hoping to connect all kinds of services through it.

Imagine being able to connect any agent to any service, website, or application: the way the internet works would change completely. Simply put, Anthropic hopes MCP will become the "universal plug" of the AI world.

With traditional APIs, the situation resembles electrical appliances that each need a different plug. If you want an AI application to connect to different data sources (such as websites and databases), you have to build a separate connection for each type of data source, which is very troublesome. The result is "integration fragmentation," like a pile of different chargers at home: messy and difficult to maintain.

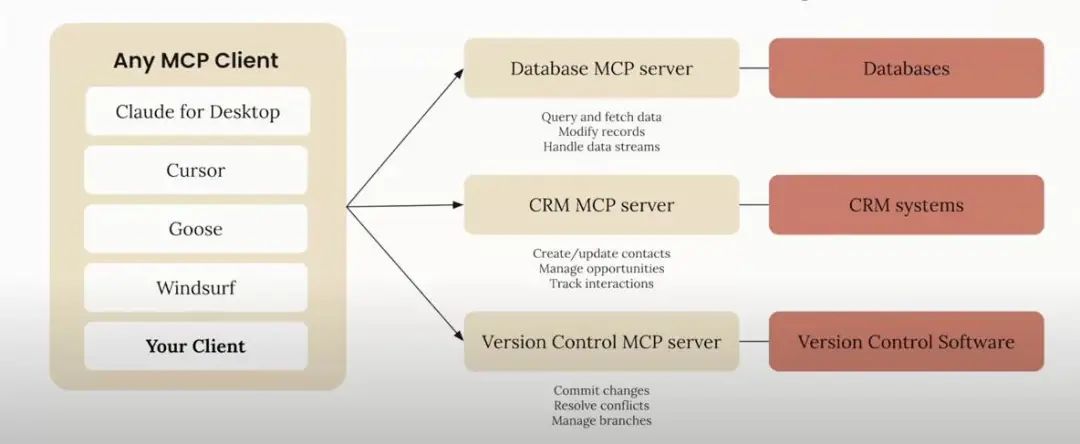

MCP, like the USB-C interface, provides a unified standard. With MCP, AI applications can connect to various data sources and tools in the same way. In this way, developers save a lot of trouble and don't have to reinvent the wheel, greatly improving development efficiency.
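To make the plug analogy concrete, here is a minimal Python sketch of the idea. It is not MCP itself: the `ToolServer` interface, the two toy servers, and the `run` helper are all invented for illustration. What it shows is that once every server speaks the same interface, the client code is written once and works with any of them.

```python
from typing import Any, Dict, List, Protocol


class ToolServer(Protocol):
    """The one interface every server in this sketch implements (hypothetical)."""

    def list_tools(self) -> Dict[str, str]: ...

    def call_tool(self, name: str, arguments: Dict[str, Any]) -> Any: ...


class WeatherServer:
    """Toy server with a single tool that returns a canned forecast."""

    def list_tools(self) -> Dict[str, str]:
        return {"get_forecast": "Return a canned forecast for a city"}

    def call_tool(self, name: str, arguments: Dict[str, Any]) -> Any:
        if name != "get_forecast":
            raise KeyError(name)
        return f"Sunny in {arguments['city']}"


class NotesServer:
    """Toy note store with two tools."""

    def __init__(self) -> None:
        self._notes: List[str] = []

    def list_tools(self) -> Dict[str, str]:
        return {
            "add_note": "Store a note and return the note count",
            "list_notes": "Return all stored notes",
        }

    def call_tool(self, name: str, arguments: Dict[str, Any]) -> Any:
        if name == "add_note":
            self._notes.append(arguments["text"])
            return len(self._notes)
        if name == "list_notes":
            return list(self._notes)
        raise KeyError(name)


def run(server: ToolServer, tool: str, **arguments: Any) -> Any:
    """Single client code path, reused unchanged for every server."""
    if tool not in server.list_tools():
        raise KeyError(f"server does not offer tool: {tool}")
    return server.call_tool(tool, arguments)


if __name__ == "__main__":
    notes = NotesServer()
    print(run(WeatherServer(), "get_forecast", city="Oslo"))
    print(run(notes, "add_note", text="Try MCP"))
    print(run(notes, "list_notes"))
```

Adding a third service here means writing one more class, with no change to `run`. That is the "integrate once" property the USB-C comparison points at.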

In addition, MCP supports bidirectional communication, which makes AI interactions more intelligent. A traditional API call is usually a one-way request and response with no real dialogue. With MCP, an AI assistant can not only fetch data but also perform operations, update information, and maintain context across multiple interactions.

MCP is also easier to scale and maintain. With ad hoc APIs, each new integration is like reinventing the wheel, which drives up development and maintenance costs. Because MCP is a standard protocol, developers integrate once and reuse that integration across multiple AI applications, greatly reducing repetitive work.

At the same time, MCP provides a unified data exchange framework that helps enterprises apply consistent security policies and simplify compliance processes. MCP also gives AI agents more autonomy on complex tasks. Agents built on traditional API mechanisms are often constrained in what they can do, whereas MCP lets them access information in real time, manage resources, and work across multiple platforms, which strengthens their ability to execute tasks and opens the door to autonomous decision-making.

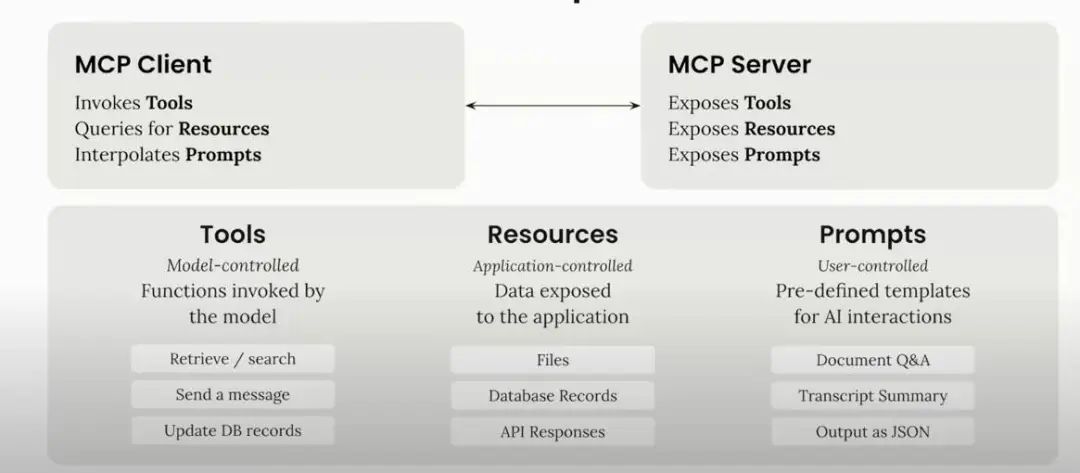

In the MCP architecture, the MCP client calls tools, queries resources, and fills in prompts to give the model context. The server exposes those tools, resources, and prompts for the client to use. Tools cover operations such as reading data, sending data, and updating databases or files, which spans almost any action. Resources are data supplied by the server, such as images, text files, or JSON files, and the application decides how to use them according to its needs.
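The division of labor between client and server can be sketched with a toy, in-memory example. It uses only the Python standard library and none of the official SDKs. The method names (`tools/list`, `tools/call`, `resources/read`, `prompts/get`) and the JSON-RPC 2.0 envelope follow the MCP specification as we understand it, but the result shapes are simplified, and the `ToyServer`, `ToyClient`, and `build_demo` names, along with the sample tool, resource, and prompt, are assumptions made for illustration.

```python
import json
from typing import Any, Callable, Dict, Optional


class ToyServer:
    """In-memory stand-in for an MCP server: no transport, no official SDK."""

    def __init__(self) -> None:
        self.tools: Dict[str, Callable[..., Any]] = {}
        self.resources: Dict[str, str] = {}
        self.prompts: Dict[str, str] = {}

    def handle(self, raw: str) -> str:
        """Answer one JSON-RPC-style request string with a response string."""
        request = json.loads(raw)
        method = request["method"]
        params = request.get("params", {})
        if method == "tools/list":
            result: Dict[str, Any] = {"tools": sorted(self.tools)}
        elif method == "tools/call":
            output = self.tools[params["name"]](**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": str(output)}]}
        elif method == "resources/read":
            uri = params["uri"]
            result = {"contents": [{"uri": uri, "text": self.resources[uri]}]}
        elif method == "prompts/get":
            template = self.prompts[params["name"]]
            # Simplified result shape; the real protocol returns messages.
            result = {"text": template.format(**params.get("arguments", {}))}
        else:
            error = {"code": -32601, "message": "Method not found"}
            return json.dumps(
                {"jsonrpc": "2.0", "id": request["id"], "error": error}
            )
        return json.dumps(
            {"jsonrpc": "2.0", "id": request["id"], "result": result}
        )


class ToyClient:
    """Builds requests, sends them to the server, and unwraps the results."""

    def __init__(self, server: ToyServer) -> None:
        self._server = server
        self._next_id = 0

    def request(self, method: str, params: Optional[Dict[str, Any]] = None) -> Any:
        self._next_id += 1
        raw = json.dumps(
            {
                "jsonrpc": "2.0",
                "id": self._next_id,
                "method": method,
                "params": params or {},
            }
        )
        response = json.loads(self._server.handle(raw))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]


def build_demo() -> ToyClient:
    """Wire up one sample tool, resource, and prompt (all made up)."""
    server = ToyServer()
    server.tools["add"] = lambda a, b: a + b
    server.resources["file:///notes.txt"] = "Ship the export feature"
    server.prompts["summarize"] = "Summarize this text: {text}"
    return ToyClient(server)


if __name__ == "__main__":
    client = build_demo()
    print(client.request("tools/list"))
    print(client.request("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}}))
    print(client.request("resources/read", {"uri": "file:///notes.txt"}))
```

In a real deployment the request strings would travel over a transport such as standard input/output or HTTP instead of a direct method call, but the split stays the same: the server owns the tools, resources, and prompts, and the client decides when to ask for them.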

According to data released by Anthropic, the adoption of MCP is growing, with more than 1,100 community-built servers and official integrations available for use. Many are built and open-sourced by individual developers, and some are built by companies themselves: https://github.com/modelcontextprotocol/servers

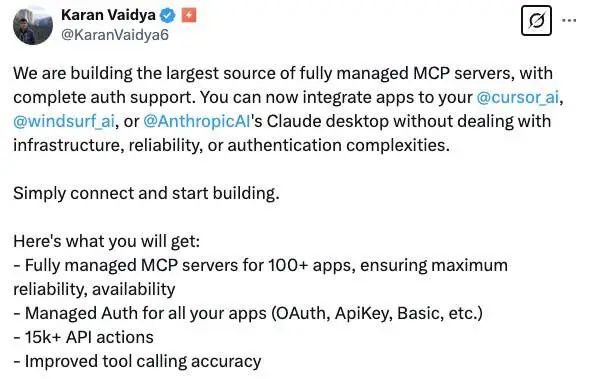

For example, a few days ago, Composio MCP announced that it is building what it calls the world's largest collection of fully managed MCP servers, which can connect to Claude, Cursor, and Windsurf, supports more than 100 applications and more than 15,000 API operations, and requires no complex authentication setup. Even more attractive, none of this requires writing any Python code.