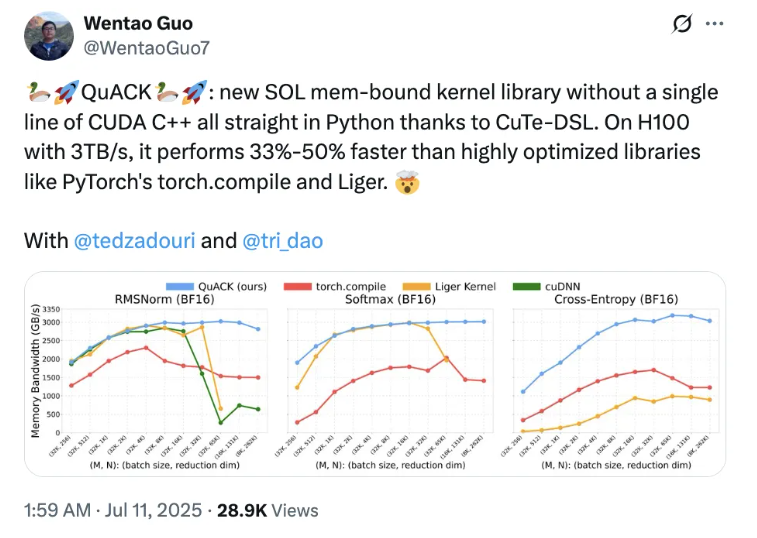

A groundbreaking new kernel library called QuACK has been released by Tri Dao, a co-author of Flash Attention, in collaboration with two PhD students from Princeton University. What sets QuACK apart is the fact that it was developed entirely in Python and CuTe-DSL, without any involvement of CUDA C++ code. This innovative approach not only breaks away from traditional programming frameworks but also achieves a remarkable 33%-50% speedup on powerful H100 GPUs compared to popular libraries like torch.compile and Liger in PyTorch.

Tri Dao believes that achieving efficient operation of memory-intensive kernels is not a secret, but rather depends on precise handling of some key details. He emphasizes the importance of understanding the thread and memory hierarchy of modern accelerators. As GPU performance optimization continues to advance, developers can now leverage CuTe-DSL, a Python-based domain-specific language, to significantly boost performance in a more user-friendly environment.

This groundbreaking achievement has quickly garnered attention from industry experts. Vijay, a senior architect at NVIDIA's CUTLASS team, expressed his admiration for the design of CuTe-DSL, which enables experts like Tri Dao to easily achieve efficient GPU operation. He also revealed that more exciting content related to this topic will be released this year. Horace He, a member of the PyTorch team, also showed great interest in this innovation, particularly noting its significant advantages in processing long sequences.

To benefit more developers, the authors of QuACK have written a detailed tutorial explaining the specific steps and code for implementation, making it easy for others to directly use. The article emphasizes that achieving efficient operation during GPU model training and inference requires optimizing both compute-intensive and memory-intensive kernels. Matrix multiplication and attention mechanisms have already been optimized in previous work, so this study focuses on memory-intensive kernels.

The authors explain that memory-intensive kernels have lower arithmetic intensity, so their throughput is more dependent on the amount of data transferred per second. By ingeniously leveraging the GPU's memory hierarchy and hardware features, the authors have successfully boosted the performance of memory-intensive kernels to nearly "lightning-fast" levels.