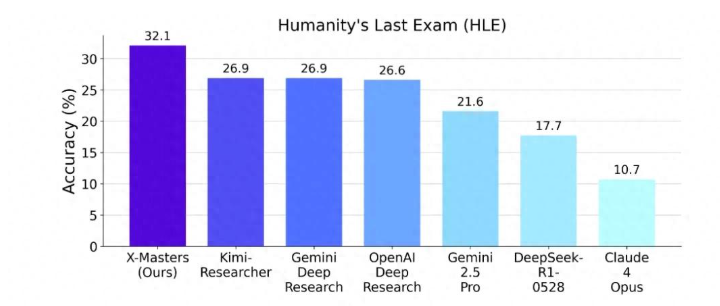

In the fiercely competitive global AI landscape, a collaboration between Shanghai Jiao Tong University and DP Technology has set a new record on Humanity's Last Exam (HLE), a benchmark known for its extreme difficulty. The team scored 32.1%, the first result to exceed 30%. The previous high was 26.9%, held by Kimi-Researcher and Gemini Deep Research.

The research introduces X-Master, a tool-augmented reasoning agent, and X-Masters, a multi-agent workflow built on top of it. Beyond its benchmark performance, the team has open-sourced the system so that others can build on it.

X-Master is designed to emulate the way human researchers solve problems, moving back and forth between internal reasoning and external tools. When it hits a problem it cannot solve by reasoning alone, X-Master expresses its plan as code, runs that code with libraries such as NumPy and SciPy, and feeds the results back into the agent's working context. This creates a feedback loop in which each tool result informs the next reasoning step.
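The reason-act-observe loop described above can be illustrated with a small sketch. This is not X-Master's actual implementation: the `<code>` and `<result>` tags, the `solve` and `run_code` helpers, and the `toy_model` stand-in for a language model are all assumptions made for illustration, and the toy snippet uses the standard `math` module rather than NumPy or SciPy so that it runs without extra dependencies.

```python
import contextlib
import io
import re

# Hypothetical convention: the model wraps code it wants executed in <code> tags.
CODE_RE = re.compile(r"<code>(.*?)</code>", re.DOTALL)


def run_code(source: str) -> str:
    """Run a snippet and return what it printed (no sandboxing in this sketch)."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(source, {})
    except Exception as exc:
        return f"Error: {exc!r}"
    return buffer.getvalue().strip()


def solve(question, model, max_rounds=4):
    """Alternate between model reasoning and code execution until an answer appears."""
    context = question
    for _ in range(max_rounds):
        step = model(context)
        context += "\n" + step
        match = CODE_RE.search(step)
        if match is None:
            return step  # no tool call requested, so treat this step as the answer
        # Execute the requested code and append the observation to the context.
        context += f"\n<result>{run_code(match.group(1))}</result>"
    return "No answer within the round limit"


def toy_model(context):
    """Stand-in for a language model: asks for one computation, then answers."""
    if "<result>" not in context:
        return "I should compute this.\n<code>import math\nprint(math.comb(10, 3))</code>"
    result = context.rsplit("<result>", 1)[1].split("</result>", 1)[0]
    return f"Answer: {result}"


print(solve("How many ways are there to choose 3 items from 10?", toy_model))
```

In a real system the model call would go to an LLM and the execution step would run in an isolated sandbox; the point here is only that each tool result is appended to the context before the next reasoning step.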

X-Masters has a more elaborate design: a scattered-and-stacked multi-agent workflow intended to increase both the breadth and the depth of reasoning. In the scattered phase, multiple solver agents work in parallel to generate diverse solutions, and critic agents then assess and refine those proposals. In the stacked phase, a rewriter agent consolidates the outputs into an improved solution, and a selector agent chooses the final answer.
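The four roles can be wired together roughly as follows. This is a minimal sketch under stated assumptions, not the team's released code: each agent is represented by a plain Python callable, the function name `solve_with_workflow` is hypothetical, the toy agents are placeholders for LLM-backed ones, and letting the selector choose among the critiqued drafts plus the rewritten solution is an assumption about the candidate pool.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def solve_with_workflow(question, solver, critic, rewriter, selector, n_solvers=3):
    """Solvers run in parallel, critics refine, a rewriter merges, a selector picks."""
    with ThreadPoolExecutor(max_workers=n_solvers) as pool:
        # Breadth: independent solvers produce diverse drafts in parallel.
        drafts = list(pool.map(lambda i: solver(question, i), range(n_solvers)))
        # Each draft is reviewed and possibly corrected by a critic.
        revised = list(pool.map(lambda d: critic(question, d), drafts))
    # Depth: the rewriter consolidates the revised drafts into one solution.
    rewritten = rewriter(question, revised)
    # Assumption: the selector sees the revised drafts and the rewritten solution.
    return selector(question, revised + [rewritten])


# Toy agents (hypothetical placeholders for LLM-backed agents).
def toy_solver(question, index):
    return ["42", "41", "42"][index % 3]  # one solver makes a mistake


def toy_critic(question, draft):
    return draft.strip()  # a real critic would check and correct the draft


def toy_rewriter(question, candidates):
    return Counter(candidates).most_common(1)[0][0]  # merge by majority


def toy_selector(question, candidates):
    return Counter(candidates).most_common(1)[0][0]  # pick the most supported answer


answer = solve_with_workflow("What is 6 * 7?", toy_solver, toy_critic,
                             toy_rewriter, toy_selector)
print(answer)
```

Passing the agents in as callables keeps the orchestration separate from whatever model sits behind each role, so the same pipeline can be exercised with simple stubs like the ones above.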

On HLE, X-Masters performed especially well in the biology/medicine category, where it outperformed existing agent systems.

Released early this year by the Center for AI Safety and Scale AI, Humanity's Last Exam (HLE) is designed to test AI systems on expert-level questions across a wide range of subjects. The questions were contributed by over 1,000 scholars from more than 500 institutions and are notoriously difficult, which is why crossing the 30% mark is regarded as a significant result.