Qwen VLo, the latest multimodal large model in the Qwen family, has been officially released. It marks a significant step forward in both understanding and generating image content, and gives users a new way to create visuals.

Qwen VLo is a comprehensive upgrade built on the strengths of the original Qwen-VL series. The model not only "understands" the world accurately but also generates high-quality recreations based on that understanding, bridging the gap between perception and generation. Users can try the new model directly on the Qwen Chat platform (chat.qwen.ai).

What sets Qwen VLo apart is its progressive generation approach. During image generation, the model builds the image step by step, from left to right and top to bottom, continuously refining and adjusting its predicted content along the way so that the final result is harmonious and consistent. This mechanism improves visual quality and also gives users a more flexible and controllable creative process.
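The left-to-right, top-to-bottom order described above is commonly called raster order. The toy Python sketch below only illustrates that ordering: a grid is filled one cell at a time, and each prediction can see every cell generated before it. The grid, the `predict` callback, and the `count_filled` predictor are all hypothetical stand-ins, not Qwen VLo's actual implementation, and the sketch does not model the model's ongoing refinement of earlier content.

```python
from typing import Callable, Optional

# A grid of generated values; None marks a cell that has not been generated yet.
Grid = list[list[Optional[int]]]


def raster_order(rows: int, cols: int) -> list[tuple[int, int]]:
    """Return (row, col) positions left to right within a row, rows top to bottom."""
    return [(r, c) for r in range(rows) for c in range(cols)]


def generate_raster(rows: int, cols: int,
                    predict: Callable[[Grid, int, int], int]) -> Grid:
    """Fill the grid in raster order. The hypothetical `predict` callback sees the
    partially generated grid, so each new cell can depend on earlier cells."""
    grid: Grid = [[None] * cols for _ in range(rows)]
    for r, c in raster_order(rows, cols):
        grid[r][c] = predict(grid, r, c)
    return grid


def count_filled(grid: Grid, r: int, c: int) -> int:
    """Toy predictor: the value is how many cells were already generated,
    which makes the visiting order visible in the output."""
    return sum(v is not None for row in grid for v in row)


if __name__ == "__main__":
    print(generate_raster(2, 3, count_filled))  # [[0, 1, 2], [3, 4, 5]]
```

Because `count_filled` returns the number of cells already produced, the printed grid records the step at which each cell was generated.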

Qwen VLo shows strong capabilities in understanding and recreating content. Compared with previous multimodal models, it maintains better semantic consistency during generation, avoiding problems such as misidentifying a car as a different object or failing to retain key structural features of the original image. For instance, when a user uploads a photo of a car and asks for a color change, Qwen VLo accurately identifies the car model, preserves its original structure, and applies the new color naturally. The result meets expectations while remaining realistic.

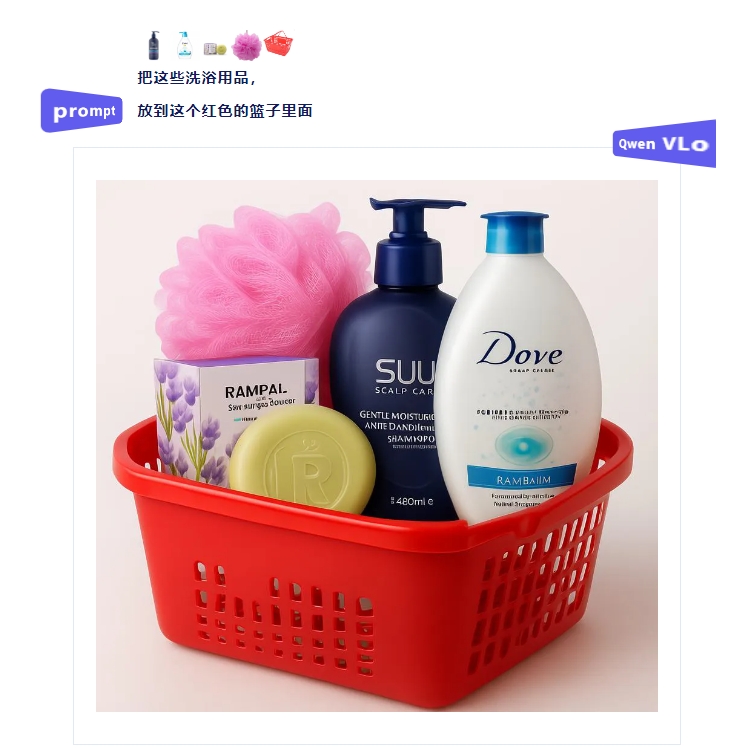

Qwen VLo also supports open-ended, instruction-based editing. Users can give a wide range of creative instructions in natural language, such as changing the painting style, adding elements, or adjusting the background, and the model responds flexibly with results that match their expectations. Whether the task is artistic style transfer, scene reconstruction, or detail modification, Qwen VLo handles it with ease.

Qwen VLo also supports instructions in multiple languages, including Chinese and English, providing a unified and convenient interaction experience for users worldwide. Whatever language they use, users simply describe what they need, and the model quickly understands the request and produces the desired result.

In practice, Qwen VLo offers a wide range of functions. It can directly generate and modify images, for example by replacing backgrounds, adding subjects, or performing style transfer. It can also make substantial modifications based on open-ended instructions, including visual perception tasks such as detection and segmentation. In addition, Qwen VLo supports understanding and generation with multiple input images, as well as image detection and annotation.

Beyond combined text and image input, Qwen VLo also supports direct text-to-image generation, including general images and posters in Chinese and English. The model is trained with dynamic resolution, so it can generate images at arbitrary resolutions and aspect ratios, letting users produce content suited to different scenarios and needs.
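One common way for image models to handle arbitrary resolutions and aspect ratios is to treat an image as a variable-size grid of fixed-size patches instead of resizing everything to a single square. The announcement does not describe Qwen VLo's internal scheme, so the Python sketch below is only an illustration of that general idea; the 16-pixel patch size and the `token_grid` helper are hypothetical assumptions.

```python
def token_grid(width: int, height: int, patch: int = 16) -> tuple[int, int, int]:
    """Map a requested image size to a grid of fixed-size patches.

    `patch` is an assumed patch edge length in pixels, not a documented
    Qwen VLo value. Each dimension is rounded to the nearest whole number
    of patches (Python's round() sends exact halves to the even integer),
    with at least one patch per side. Returns (cols, rows, total_patches).
    """
    cols = max(1, round(width / patch))
    rows = max(1, round(height / patch))
    return cols, rows, cols * rows


if __name__ == "__main__":
    print(token_grid(1024, 576))  # 16:9 landscape -> (64, 36, 2304)
    print(token_grid(576, 1024))  # 9:16 portrait  -> (36, 64, 2304)
    print(token_grid(512, 512))   # square         -> (32, 32, 1024)
```

In this sketch, landscape and portrait requests of the same area yield grids with different shapes but the same number of patches, which is what lets a single model cover many aspect ratios without distorting the image.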

Qwen VLo is currently in preview. Although it already shows strong capabilities, it still has shortcomings: generated content may be inconsistent with reality or with the original image. The development team has stated that it will continue to iterate on the model to improve its performance and stability.

Experience Qwen VLo at chat.qwen.ai.