Ant Group's technology team has recently open-sourced Ring-lite, a lightweight reasoning model. Ring-lite achieves strong results across a range of reasoning benchmarks, setting a new state of the art (SOTA) among lightweight reasoning models and reinforcing the case for the Mixture of Experts (MoE) architecture.

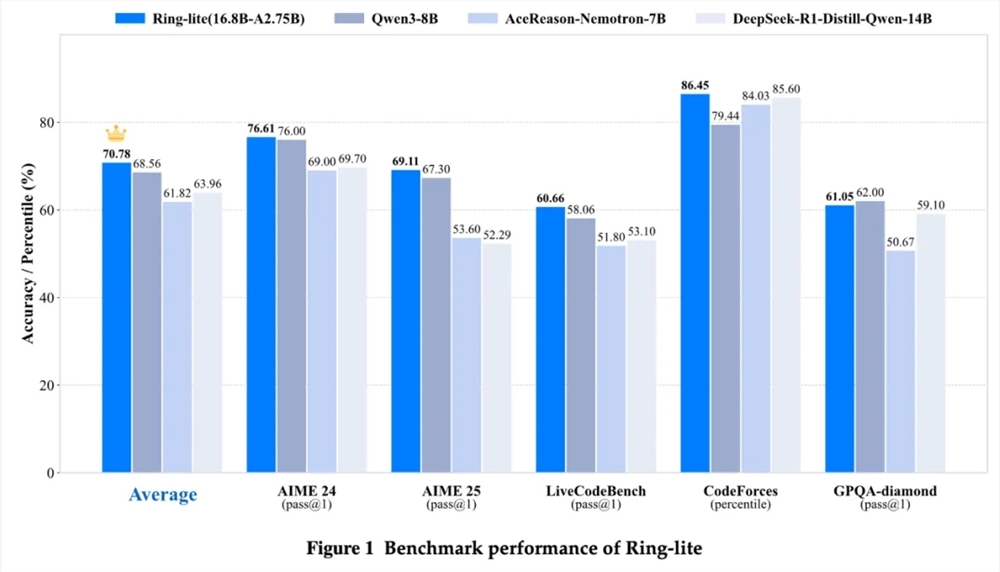

Ring-lite builds on Ant Group's previously released Ling-lite-1.5 and uses an MoE architecture with 16.8 billion total parameters, of which only 2.75 billion are active for any given token. With this design, Ring-lite performs strongly on AIME24/25, LiveCodeBench, Codeforces, GPQA-diamond, and other reasoning benchmarks, matching dense models under 10 billion parameters, which have roughly three times as many active parameters.
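The gap between total and active parameters comes from sparse routing: a router scores every expert for each token, but only the top-k experts actually run. The sketch below illustrates that idea with one common top-k gating variant and a parameter-count helper. The function names and example sizes are hypothetical and are not Ring-lite's actual router or configuration, which the article does not describe.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts for one token (hypothetical helper).

    Returns (expert_index, gate_weight) pairs; gate weights are renormalized
    over the selected experts so they sum to 1. Only these k experts run.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in top)
    return [(i, probs[i] / total) for i in top]


def param_counts(shared_params, params_per_expert, num_experts, k):
    """Parameters stored in the model vs. parameters used for a single token.

    Assumes a simplified layout: shared weights (attention, embeddings, etc.)
    that every token uses, plus num_experts equally sized experts.
    """
    total = shared_params + num_experts * params_per_expert
    active = shared_params + k * params_per_expert
    return total, active


if __name__ == "__main__":
    # Hypothetical router scores for 4 experts; with k=2, experts 1 and 3 run.
    print(top_k_route([0.1, 2.0, -1.0, 1.5], k=2))
    # Hypothetical sizes: 8 experts stored, 2 used per token.
    print(param_counts(shared_params=100, params_per_expert=10, num_experts=8, k=2))
```

Using the article's figures, the active share is 2.75 / 16.8 ≈ 0.16, so roughly one-sixth of the weights participate in each token's forward pass; three times 2.75 billion is about 8.25 billion, consistent with the comparison against dense models under 10 billion parameters.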

On the technical side, the Ring-lite team introduced several innovations. Its proprietary C3PO reinforcement learning method addresses the optimization problems caused by fluctuating response lengths during RL training, substantially reducing training instability and throughput fluctuations. The team also studied how best to balance Long-CoT SFT and RL, proposing an entropy-loss-based approach that trades off training effectiveness against sample efficiency and further improves model performance.
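The article does not explain how C3PO works internally. One simple way to keep per-step compute from swinging with response length is to fill each optimization step up to a fixed token budget instead of a fixed number of responses. The sketch below shows that general idea only; `Rollout`, `pack_by_token_budget`, and the budget value are hypothetical and should not be read as C3PO's actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class Rollout:
    prompt_id: int
    num_tokens: int  # length of the sampled response, in tokens


def pack_by_token_budget(rollouts, token_budget):
    """Greedily group rollouts, in arrival order, into training batches whose
    total token count stays at or below token_budget (hypothetical helper).

    A single rollout longer than the budget gets a batch of its own,
    so no sample is silently dropped.
    """
    batches = []
    current, used = [], 0
    for r in rollouts:
        # Close the current batch if adding this rollout would exceed the budget.
        if current and used + r.num_tokens > token_budget:
            batches.append(current)
            current, used = [], 0
        current.append(r)
        used += r.num_tokens
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    lengths = [300, 500, 400, 900, 100, 1500]
    rollouts = [Rollout(i, n) for i, n in enumerate(lengths)]
    for batch in pack_by_token_budget(rollouts, token_budget=1000):
        print([r.num_tokens for r in batch], sum(r.num_tokens for r in batch))
```

With fixed batches of two responses, the same lengths would give per-step token counts of 800, 1300, and 1600; budget-based packing keeps every multi-sample step at or below 1000 tokens, which is the kind of stability the paragraph above attributes to C3PO.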

Ring-lite also tackles joint training across multiple domains, systematically evaluating the strengths and limitations of mixed and phased training. The model achieves synergistic gains across mathematics, coding, and science, performing especially well on complex reasoning tasks such as mathematical reasoning and programming competitions.
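The difference between the two multi-domain strategies can be shown as two data schedules. Mixed training pools samples from every domain and shuffles them together, while phased training covers one domain at a time in a chosen order. This is a minimal sketch: the domain names come from the article, but the sample data, function names, and phase order are hypothetical, and the article does not say which order or mixing ratio Ring-lite used.

```python
import random


def mixed_schedule(datasets, seed=0):
    """Mixed training: pool samples from all domains and shuffle them together."""
    pool = [(domain, x) for domain, samples in datasets.items() for x in samples]
    random.Random(seed).shuffle(pool)  # seeded so the schedule is reproducible
    return pool


def phased_schedule(datasets, phase_order):
    """Phased training: train on one domain at a time, in the given order."""
    return [(domain, x) for domain in phase_order for x in datasets[domain]]


if __name__ == "__main__":
    # Hypothetical toy data standing in for math, code, and science corpora.
    data = {"math": ["m1", "m2"], "code": ["c1"], "science": ["s1"]}
    print("mixed: ", mixed_schedule(data))
    print("phased:", phased_schedule(data, ["math", "code", "science"]))
```

Both schedules see exactly the same samples; they differ only in ordering, which is what determines whether later domains can interfere with, or build on, what was learned earlier.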

To assess Ring-lite's practical capabilities, the team tested it on high school mathematics and physics problems. On the mathematics paper of China's national college entrance examination, Ring-lite scored around 130 out of 150.

Ant Group's technology team has committed to open-sourcing not only Ring-lite's model weights and training code but also, over time, all of its training datasets, hyperparameter configurations, and experiment logs. This may be the first lightweight MoE reasoning model to be fully transparent across the entire training pipeline, giving researchers in the field a valuable reference.

For more information and access to Ring-lite, please visit the following links:

GitHub: https://github.com/inclusionAI/Ring

Hugging Face: https://huggingface.co/inclusionAI/Ring-lite

ModelScope: https://modelscope.cn/models/inclusionAI/Ring-lite