The curtain has fallen on the 2025 Gaokao, but the discussion of AI's mathematical ability is only beginning. Quark, a homegrown Chinese AI product, has set a new benchmark for AI math performance, outscoring its competitors in two independent evaluations.

In public evaluations designed to mirror the Gaokao's rigor, Quark, DouBao, YuanBao, and ChatGPT were each given the 2025 national mathematics paper. All of the models were tested under identical conditions: disconnected from the internet and running only in deep thinking mode.

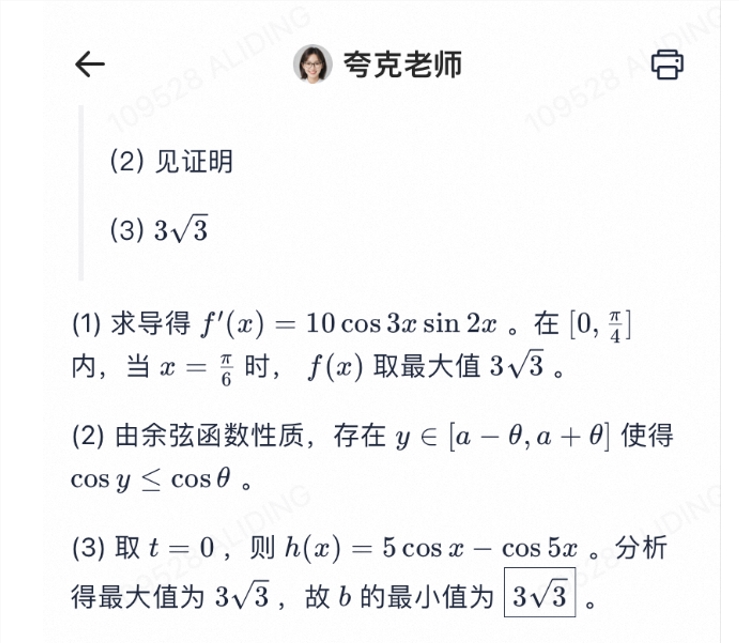

In the assessment conducted by Blue Whale Finance, Quark topped the leaderboard with 145 points. It also ranked first on the multiple-choice and fill-in-the-blank questions, with 93% accuracy. Every AI product stumbled on the same item, however: none answered the sixth multiple-choice question correctly. The evaluators found that the models misread the vector coordinates and arrow directions in the diagram, which led to the errors.

In the "Four Woods Relativity" evaluation, Quark again took first place, with a score of 146. It was also the fastest, with DouBao close behind. For example, Quark could complete a problem-solving question within four minutes, while the other products averaged around six minutes.

Behind Quark's problem-solving performance is the "Quark Learning Lingzhi Model." Built on Tongyi Qianwen (Qwen), the model draws on a large library of learning materials and on post-training. It is particularly strong on complex science problems and offers users a new, heuristic learning experience.