On June 6th, ModelBest (面壁智能) launched a new AI model series, MiniCPM4.0. The company bills the series as the "most imaginative pocket rocket in history," claiming a new benchmark in both performance and technological innovation.

The MiniCPM4.0 series comprises two models: the 8B "Lightning Sparse" version, which gains its efficiency from a sparse attention architecture, and the compact 0.5B version, billed as the "strongest mini pocket rocket." Both models target speed, efficiency, performance, and practical deployment.

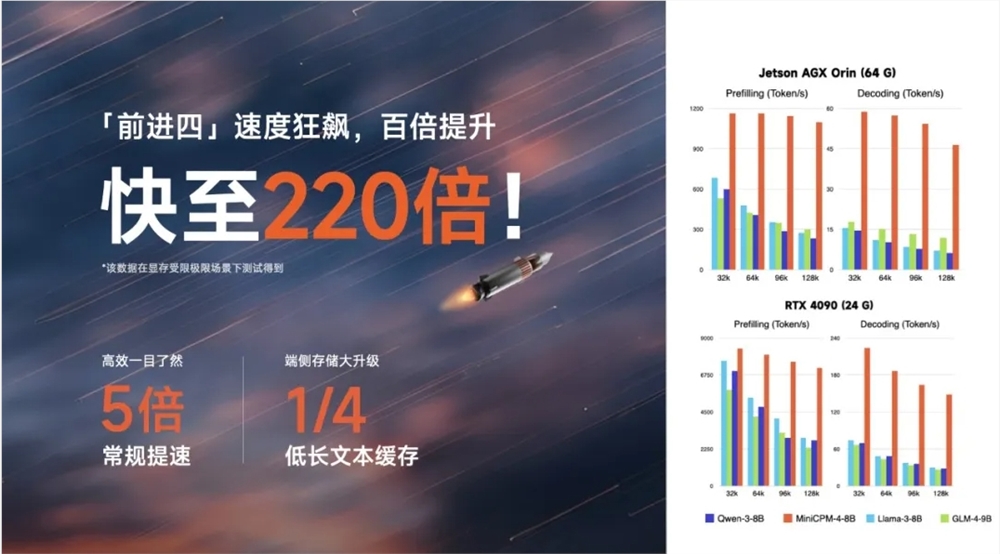

In terms of speed, MiniCPM4.0 is reported to run up to 220 times faster in extreme scenarios and about 5 times faster in typical ones. The speedup is attributed to sparsity optimizations stacked across several layers of the system. The model uses what the company calls "dual-frequency gear-shifting": it automatically switches between sparse and dense attention depending on text length, using sparse attention for long texts and dense attention for short ones. This keeps long-text processing fast while significantly reducing on-device storage requirements. Compared with similar models such as Qwen3-8B, MiniCPM4.0 needs only a quarter of the cache storage space.
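The length-based switching and the cache comparison can be sketched in a few lines. This is only an illustration, not MiniCPM4.0's actual code: the function names and the 8192-token cutoff are hypothetical, since the article does not state the real switching length.

```python
# Hypothetical cutoff; the article does not give the real switching length.
LONG_TEXT_THRESHOLD = 8192


def choose_attention_mode(num_tokens, threshold=LONG_TEXT_THRESHOLD):
    """Return "sparse" for long inputs and "dense" for short ones."""
    return "sparse" if num_tokens > threshold else "dense"


def relative_cache_size(baseline_bytes, ratio=0.25):
    """Scale a baseline cache size by the "one quarter" figure quoted above."""
    return baseline_bytes * ratio


print(choose_attention_mode(512))      # short prompt
print(choose_attention_mode(131072))   # long document
print(relative_cache_size(4096))       # cache need for a 4096-byte baseline
```

The point of the switch is that sparse attention only pays off once the context is long enough for its bookkeeping to be cheaper than attending to everything.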

On efficiency, MiniCPM4.0 is described as the industry's first fully open-source, system-level context sparsity solution. It reaches its extreme acceleration at a sparsity level of 5%, meaning that only about 5% of the context takes part in each attention computation. The series combines in-house optimizations across the architecture, system, inference, and data layers so that sparsity is exploited efficiently in both hardware and software.
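To make the 5% figure concrete, here is a minimal sketch of one common way to sparsify attention: score blocks of the context and keep only the highest-scoring ones. It is an assumption for illustration, not the method MiniCPM4.0 is confirmed to use; `select_blocks` and its inputs are hypothetical names.

```python
import math


def select_blocks(block_scores, keep_ratio=0.05):
    """Return the indices of the highest-scoring context blocks.

    keep_ratio=0.05 mirrors the 5% sparsity quoted in the article.
    At least one block is always kept.
    """
    num_keep = max(1, math.ceil(len(block_scores) * keep_ratio))
    # Rank block indices by score, highest first.
    ranked = sorted(range(len(block_scores)),
                    key=lambda i: block_scores[i], reverse=True)
    # Return the kept indices in their original (positional) order.
    return sorted(ranked[:num_keep])


scores = [0.3, 0.1, 0.9, 0.7, 0.2, 0.5, 0.4, 0.8]
print(select_blocks(scores, keep_ratio=0.25))
```

Attention would then be computed only over the selected blocks, which is where the savings on long texts would come from.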

On performance, MiniCPM4.0 continues the series' tradition of "punching above its weight." The 0.5B version is said to deliver double the performance with half the parameters, at only 2.7% of the training cost. The 8B sparse version, meanwhile, outperforms Qwen3 and Gemma3-12B at 22% of the training cost, strengthening its lead in the on-device domain.

In practical applications, MiniCPM4.0 relies on the in-house CPM.cu fast on-device inference framework, combined with model compression, quantization, and on-device deployment frameworks. Together these achieve a 90% reduction in model size while significantly improving speed, giving a smooth on-device inference experience from start to finish.

The model has been adapted to mainstream chips from Intel, Qualcomm, MediaTek (MTK), and Huawei Ascend, and has been deployed on multiple open-source frameworks, further broadening its application potential.

For more information and to explore the MiniCPM4.0 series, visit the model collection at ModelScope and the GitHub repository for the project.