In the rapidly evolving field of AI, extracting and reasoning about critical information from visual languages such as images, tables, and design drafts has long been a significant challenge. Traditional Retrieval-Augmented Generation (RAG) methods struggle with visually rich information, primarily due to their inability to effectively handle visual content like images and charts. Furthermore, existing visual RAG methods are limited by a fixed retrieval-generation process, hindering their ability to fully exploit the wealth of knowledge embedded within visual information.

To address these challenges, Tongyi Lab's Natural Language Intelligence Team has recently released and open-sourced VRAG-RL, a visual perception-driven multimodal RAG inference framework. This groundbreaking framework introduces a systematic innovation in three key dimensions: reinforcement learning-enabled multimodal agent training, visual perception mechanism design, and retrieval-reasoning collaborative optimization.

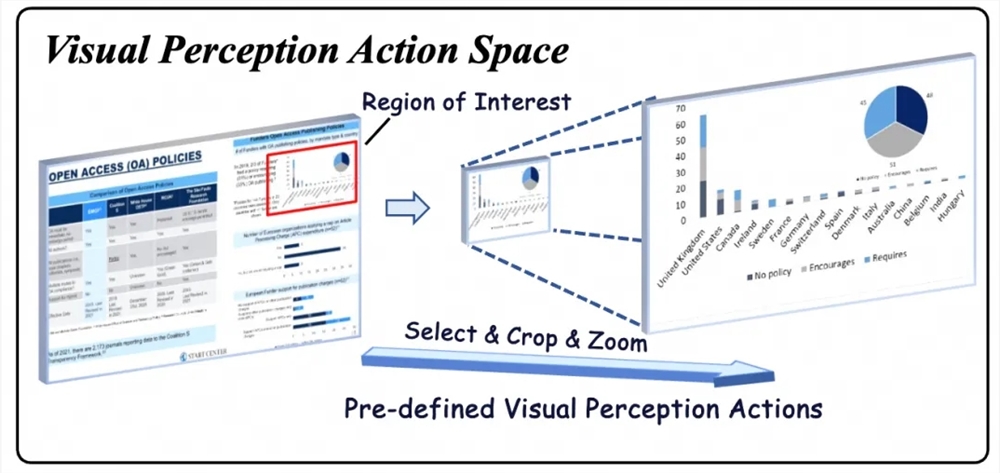

VRAG-RL incorporates a diverse range of visual perception actions, such as region selection, cropping, and scaling, enabling the model to gradually focus on information-dense areas from coarse to fine granularity. This coarse-to-fine perception approach not only enhances the model's understanding of visual information but also significantly improves retrieval efficiency.

During training, VRAG-RL employs a multi-expert sampling strategy that combines the reasoning capabilities of large-scale models with the precise annotation capabilities of expert models. This allows the model to learn more effective visual perception strategies. Additionally, its fine-grained reward mechanism integrates factors such as retrieval efficiency, pattern consistency, and generation quality, guiding the model to continuously optimize the retrieval and reasoning paths during interactions with search engines. This multi-dimensional reward mechanism achieves bidirectional driving of retrieval and reasoning, forming a closed-loop optimization.

VRAG-RL also introduces the industry-leading GRPO algorithm, which simulates real-world application scenarios by deploying a local search engine, achieving zero-cost search engine calls and more efficient model training. This training approach not only enhances the model's generalization ability but also enables it to excel in various domains and types of visual tasks.

Experimental results demonstrate that VRAG-RL has achieved significantly better performance than existing methods on multiple visual language benchmark datasets, covering task types from single-hop to multi-hop reasoning, pure text understanding, chart recognition, and complex layout parsing in visually rich scenarios. VRAG-RL outperforms both traditional prompt-based methods and reinforcement learning-based methods, showcasing its superior comprehensive performance.

Moreover, VRAG-RL supports multi-round interactions, allowing it to gradually focus on information-dense areas during the reasoning stage, achieving coarse-to-fine information acquisition. By optimizing retrieval efficiency and reasoning paths, this method significantly enhances the model's performance on visual tasks while maintaining high efficiency.

The VRAG-RL framework is now available on Github: github.com/Alibaba-NLP/VRAG, providing a powerful tool for AI researchers and developers to tackle complex visual reasoning challenges and unlock new possibilities in the field.